I’m watching a machine learning talk by François Chollet that starts off by making the case that Large Language Models (LLMs) are not great at reasoning tasks. It then compares LLMs to the perception/intuition kind of thinking that humans do.

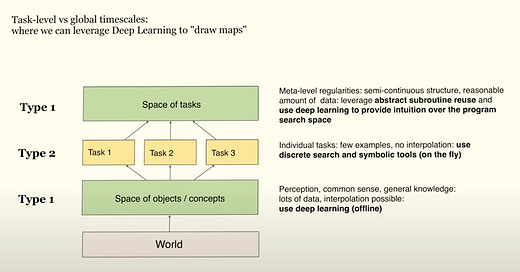

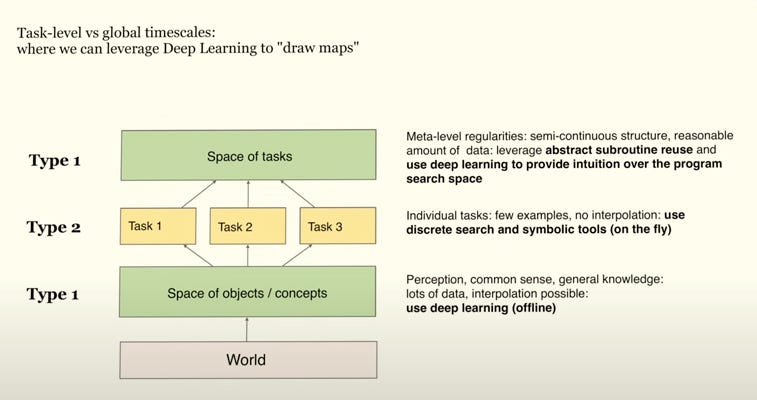

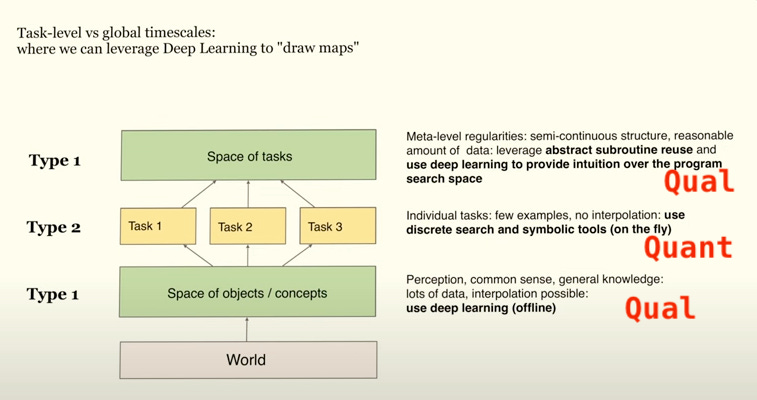

At around the 41:08 mark, François showed this slide.

François used the Type 1/Type 2 architecture made famous by Kahneman as an analogy, with LLMs as Type 1 and symbolic tools as Type 2.

I’m mentioning this because I came across this other post recently about consulting.

In case the embed doesn’t work, it says BCG taught the author the technique of breaking down a hard problem as Qual > Quant > Qual. That is a parallel to François’ point about sandwiching Type 2 reasoning between Type 1 deep learning.

If you’re familiar with the general idea of LLMs, you know that building a good Type 1 system takes lots of data. It’s as if quantity at a lower level (data) generates quality at a higher level (intuition/perception) in a hierarchy.

For Type 2, you need to spend a sufficient quantity of time, either to perform the discrete search during problem solving or to learn the associated skills in the first place. For example, it’s arguable that we put people through years of formal schooling essentially to learn Type 2 reasoning skills and habits.
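To make the Type 1/Type 2 pairing a bit more concrete, here is a toy sketch of my own, not something from the talk. A cheap heuristic stands in for Type 1 intuition, and an explicit discrete search stands in for Type 2 reasoning. The puzzle (reach a target number using only `+3` and `*2`) and the names `intuition_score`, `deliberate_search`, and `replay` are all made up for illustration.

```python
import heapq

# Hypothetical toy puzzle: get from `start` to `target` using only these moves.
MOVES = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}


def intuition_score(value, target):
    """Type 1 stand-in: a fast, approximate guess at how promising a state is."""
    return abs(target - value)


def deliberate_search(start, target):
    """Type 2 stand-in: explicit step-by-step search, ordered by the Type 1 guess.

    Assumes positive integers. Returns a list of move names, or None if the
    target cannot be reached within the bounded search space.
    """
    frontier = [(intuition_score(start, target), start, [])]
    seen = {start}
    while frontier:
        _, value, path = heapq.heappop(frontier)
        if value == target:
            return path
        for name, move in MOVES.items():
            nxt = move(value)
            # Bound the space so the search always terminates.
            if nxt not in seen and nxt <= 2 * target:
                seen.add(nxt)
                heapq.heappush(
                    frontier, (intuition_score(nxt, target), nxt, path + [name])
                )
    return None


def replay(start, path):
    """Apply a list of move names to `start`, to check a found solution."""
    value = start
    for name in path:
        value = MOVES[name](value)
    return value


if __name__ == "__main__":
    path = deliberate_search(1, 11)
    print(path, replay(1, path))
```

The heuristic only decides which states get looked at first. The search is what guarantees that any answer it returns is actually correct, which is roughly the division of labour the analogy points at.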

Let me know if you see any other fields where the same pattern applies.